What’s Wrong with NEOWISE

In a 2016 preprint and in two papers published online in the journal Icarus, “Asteroid thermal modeling in the presence of reflected sunlight” (December 2017) and “An empirical examination of WISE/NEOWISE asteroid analysis and results” (June 2018), I raised a number of scientific, methodological, and ethical problems with the NEOWISE asteroid research project and its published papers and results. In this article, I summarize the problems in a less formal way than the papers, and I explain how the scientific misconduct that I found can be verified by anyone. Unlike in a formal research paper, I can also include the NEOWISE group’s responses; these are shown to be specious with similarly simple methods. This is a follow-up to my earlier article “A Simple Guide to NEOWISE Problems,” which I posted to Medium two years ago.

The NEOWISE research project was funded by NASA and based at its Jet Propulsion Laboratory (JPL). The project used infrared (IR) observations of asteroids made by the NASA WISE spacecraft mission to estimate the physical properties of asteroids: chiefly the diameter D, but also the albedo (reflectivity) in visible light pv, and the albedo in IR light pIR. These parameters are estimated by fitting a model (i.e., by fitting a curve) to the observational data, which in the case of NEOWISE is the amount of IR light flux in each of four bands W1, W2, W3, W4, each of which measures different wavelengths of IR light.

NEOWISE observed vastly more asteroids in the IR than all previous studies combined. No currently scheduled or proposed mission will observe a comparable number of asteroids in four IR bands, so the WISE/NEOWISE data set is the best information on asteroid sizes and albedos that science will have for many years. As a result, it ought to be a treasure trove of information about asteroids that is widely used by the community with full confidence in its reliability and a full understanding of how the numerical results were derived.

In order to assess the accuracy of the asteroid diameters that NEOWISE estimated, the established method is to compare the diameters obtained by thermal modeling to diameters determined independently for the same asteroids by other methods, notably by bouncing radar beams off them, by observing them from spacecraft, and by timing the dip in starlight that occurs when an asteroid passes directly in front of a star (a so-called occultation). For simplicity, let’s refer to these gold-standard radar, occultation, and spacecraft measurements as ROS diameters. The NEOWISE group did compare model diameters to ROS diameters in this way in a preliminary calibration paper in which they analyzed and criticized previous work, including estimates made using the IRAS space telescope and estimates published by Ryan and Woodward. But if the NEOWISE team ever performed this obvious, reliable test for accuracy on their own results, they didn’t publish the outcome. Instead, they claimed great accuracy without much support. They simply copied the ROS diameters out of previous papers by others and presented them as NEOWISE modeling results, making it impossible for third parties to check the actual accuracy.
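The kind of comparison described above can be sketched in a few lines of Python. This is only an illustration of the arithmetic, not the procedure used in any of the papers: the function names `fractional_errors` and `summarize` are hypothetical, and the diameters in the example are placeholders, not measurements.

```python
import statistics

def fractional_errors(model_km, ros_km):
    """Return (D_model - D_ROS) / D_ROS for asteroids present in both inputs.

    Both arguments map an asteroid identifier to a diameter in km.
    """
    common = sorted(model_km.keys() & ros_km.keys())
    return {k: (model_km[k] - ros_km[k]) / ros_km[k] for k in common}

def summarize(errors):
    """Return the mean (bias) and sample standard deviation (scatter)."""
    values = list(errors.values())
    return statistics.mean(values), statistics.stdev(values)

# Placeholder values for illustration only; these are NOT real measurements.
model = {"asteroid_a": 110.0, "asteroid_b": 45.0, "asteroid_c": 20.0}
ros = {"asteroid_a": 100.0, "asteroid_b": 50.0, "asteroid_c": 20.0}

errs = fractional_errors(model, ros)
bias, scatter = summarize(errs)
print(errs)
print(f"bias = {bias:+.3f}, scatter = {scatter:.3f}")
```

A comparison like this is only meaningful if the model diameters really come from model fitting. If a ROS diameter has been copied into the model table, its fractional error is zero by construction and tells us nothing about accuracy.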

The problems I have identified with NEOWISE can be divided into three categories:

- scientific errors in the NEOWISE papers published from 2011 through 2014,

- scientific misconduct evident in those papers,

- and further misconduct, apparently to cover up the issues, which occurred after I first pointed out these problems in a preprint article in 2016.

Virtually all scientists abhor the idea of misconduct, so I do not make this charge lightly. What I mean by “misconduct” are actions that violate common standards of the ethical practice of science. These include ethical guidelines for statistical practice (e.g., guidelines adopted by the American Statistical Association), as well as the ethical standards of scientific journals and institutions. NASA has its own regulations about research misconduct. The most basic of these rules can be summarized very simply:

- don’t present other people’s work as your own,

- describe the methods used to get the results so others can replicate them,

- and avoid anything that is misleading or deceptive to readers.

It’s important to keep in mind that misconduct of these kinds can and does occur unintentionally, just as customers sometimes inadvertently take goods out of stores without paying. That’s still shoplifting, even if the intent was not to steal, and it’s wrong. Similarly, publishing misleading information in a scientific paper is wrong, regardless of what led to it. The appropriate response for an author is to publicly acknowledge and correct the misstep immediately, retracting the paper if the misconduct affected the results in a material way.

As it happens, my recent papers and other publications have documented strong evidence — including statements by some NEOWISE researchers themselves — that the issues I am calling misconduct in the NEOWISE papers were not inadvertent. They appear to have been deliberate choices made repeatedly by the NEOWISE team over a long period of time.

These actions have caused the astronomical community to work under the false belief that the NEOWISE results are more accurate (have smaller errors) than the evidence warrants. They have also allowed the NEOWISE group to effectively monopolize the use of the asteroid diameter and albedo data, which is required by both NASA policy and scientific norms to be shared openly with the scientific community.

Since I first brought these issues to light, the NEOWISE group has reacted extremely deceptively. To return to the shoplifting example, it’s like they claimed they did pay when confronted, and then bolted out the door. Had the NEOWISE team shown any good intentions whatsoever in this matter when I initially raised the issues, I would now be pursuing this matter quite differently. But further acts of misconduct seem to have been committed to cover up the original issues, suggesting that at least some of the researchers involved have been acting in bad faith.

Below I summarize the problems briefly. I then provide more detailed explanation and evidence for each in turn. While the scientific errors require some detailed arguments and calculations (which can be found in my two Icarus papers), most of the misconduct problems — including the worst ones — can be easily verified by anybody; instructions below show how. Those matters generally don’t require detailed scientific knowledge or subjective judgment. All one need do is to compare two numbers or two sorted lists to see whether or not they match up.
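To give a sense of how simple such a check is, here is a minimal Python sketch of comparing two tables of diameters. The function name `identical_entries` and both tables are hypothetical placeholders for illustration; they are not taken from any published paper or archive.

```python
def identical_entries(table_a, table_b):
    """Return identifiers whose printed diameters match exactly in both tables.

    Diameters are kept as strings, exactly as printed, so that a value
    such as "12.30" is not silently treated as equal to "12.3".
    """
    shared = table_a.keys() & table_b.keys()
    return sorted(k for k in shared if table_a[k] == table_b[k])

# Placeholder tables for illustration only; NOT real published values.
results_table = {"asteroid_a": "101.200", "asteroid_b": "45.678", "asteroid_c": "20.1"}
earlier_table = {"asteroid_a": "101.200", "asteroid_b": "44.9", "asteroid_d": "7.5"}

print(identical_entries(results_table, earlier_table))
```

Comparing the printed strings rather than floating-point numbers is a deliberate choice in this sketch: the question is whether two published figures are identical digit for digit, which requires no judgment about rounding or tolerance.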

Scientific and methodological errors (2011 to 2014)

The worst examples of scientific error in the NEOWISE papers, all quite serious, are listed below. My two Icarus papers and the Notes section below discuss them in more detail.

1. Violation of Kirchhoff’s law of thermal radiation. The NEOWISE analysis violates this fundamental and simple law, which is taught in every freshman physics course.

2. Incorrect albedos and diameters. A simple formula defines the mathematical relationship among visible-band albedo, absolute visible magnitude, and diameter. This formula is listed in each of the NEOWISE result papers. Yet about 14,000 of the NEOWISE results violate the formula.

3. No proper error analysis. A basic part of any scientific study of this kind is to analyze the various sources of error in the results — that is called error analysis. In addition, one would like to compare new results to those previously obtained by using other methods, which could be called accuracy analysis. The NEOWISE error analysis is very inadequate (more on this below). The accuracy analysis is essentially non-existent.

4. Nonstandard and unjustified data analysis. The NEOWISE data analysis relied heavily on nonstandard methods of several kinds. Some of these methods involved discarding much of the observational data. The NEOWISE papers give no valid reasons for discarding that much data.

5. Exceptions to data-processing rules. In multiple instances, the NEOWISE papers describe an approach to data analysis (for example, an assumption that they employ for a group of asteroids) that upon inspection turns out not to have been reliably followed. In addition to using many ad hoc rules, NEOWISE seems to have broken many of these rules in numerous undocumented exceptions.

6. Underestimated observational errors. A fundamental input to the NEOWISE analysis is the estimated observational error in flux. Hanus et al. (2015) showed that the WISE/NEOWISE analysis systematically underestimated the errors. They found that the true errors were at least 40% larger than claimed in the W3 band and 30% larger in the W4 band.

My paper corroborates those findings and, using a much larger sample of WISE data, shows that the errors were 150% larger in the W1 band and 50% larger in the W2 band than previously claimed. This finding invalidates the NEOWISE error analysis and has an effect on every NEOWISE result.

7. Poor-quality model fits. Many NEOWISE results fit the data very poorly. In some cases, the fit is so bad that the result appears to have been effectively fabricated. In other cases, the fits are unnecessarily poor.
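The formula mentioned in point 2 is, in its commonly quoted form, D = (1329 km / sqrt(pv)) × 10^(−H/5), where H is the absolute visible magnitude. The Python sketch below shows how a published (D, H, pv) triple could be tested against it. The function names and the 1% tolerance are assumptions chosen for illustration; a real check would need a tolerance matched to the rounding of the published values.

```python
import math

def diameter_km(h_mag, p_v):
    """Diameter in km implied by absolute magnitude H and visible albedo p_v."""
    return 1329.0 / math.sqrt(p_v) * 10 ** (-h_mag / 5.0)

def violates_relation(d_km, h_mag, p_v, rel_tol=0.01):
    """True if a published diameter differs from the formula by more than rel_tol.

    rel_tol is an illustrative tolerance, not a value taken from any paper.
    """
    expected = diameter_km(h_mag, p_v)
    return abs(d_km - expected) / expected > rel_tol

# With H = 0 and p_v = 1 the formula gives exactly 1329 km; every 5 magnitudes
# fainter corresponds to a diameter 10 times smaller.
print(diameter_km(0.0, 1.0))
print(diameter_km(5.0, 1.0))
```

Because the relation ties the three quantities together, any two of them determine the third. A result whose published D, H, and pv disagree with it is internally inconsistent, whichever of the three is at fault.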

Deception and misconduct, part I: The original papers, 2011 to 2014

The initial round of NEOWISE papers contains the following breaches of the normal rules of science. Here is a list of the worst offenses.

8. Irreproducible results. The NEOWISE papers do not describe their methods in sufficient detail to allow them to be replicated (i.e., to perform the same calculations on observational data and get the same results). NEOWISE team leaders have consistently refused to explain these details to any external researchers in the field outside the NEOWISE group.

This is a clear violation of the normal practice of science. It is also a violation of NASA and JPL rules regarding disclosure of relevant science to the scientific community. One can speculate on at least two possible motives for the secrecy: it could be to prevent discovery of other problems discussed below, and it might also be intended to allow the NEOWISE group to monopolize the data set so that they can dominate the field in this area.

9. Exaggerated accuracy. The NEOWISE papers repeatedly and systematically exaggerate the level of accuracy in their diameter estimates. The initial NEOWISE paper on accuracy claims (erroneously, as it turns out) that the minimum systematic error is 10%. Subsequent papers make far more aggressive claims, however, such as “Using a NEATM thermal model fitting routine, we compute diameters for over 100,000 main belt asteroids from their IR thermal flux, with errors better than 10%” (Masiero et al. 2011). Yet that comment is not supported with independent analysis; instead, they simply reference the previous paper that claims a minimum error of 10%. It is unethical to make unsubstantiated accuracy claims and to falsely reference papers in a deceptive manner.

10. Conflating accuracy across multiple models and data. The NEOWISE papers use 10 different models and 12 different combinations of data from the W1 through W4 bands, with 47 different combinations of models and bands. Each combination ought to have different accuracy and error properties, yet the information as to which result was calculated with each combination was not disclosed until 2016. It is deceptive to claim an overall accuracy based on a best-case model and to imply that this is typical of all of the model/data combinations.

11. Copied ROS diameters presented as NEOWISE results. In more than 100 cases, the NEOWISE group intermingled previously published ROS diameters for asteroids in tables of NEOWISE model-fit diameters, without explaining that they were doing so or referencing the sources of the copied diameters. That is plagiarism. It also created a false impression that NEOWISE had excellent accuracy, and it prevented any third party from making their own assessment of the accuracy.

12. Unfair criticism of work by others. One NEOWISE paper (Mainzer et al. 2011c) compared ROS asteroid diameters to model-fit diameters from two other research projects (IRAS and Ryan and Woodward). Based on this comparison, the NEOWISE group criticized those earlier studies for being “biased,” and argued that NEOWISE is superior. Yet they failed to publish the same comparison for NEOWISE diameters. Furthermore, they made it impossible for third parties to make this comparison, because they copied the same ROS diameters and misrepresented those measurements as model fits.

Adding insult to injury, the group has made it impossible to replicate their calculations by refusing direct requests to share required details. It is unethical for the NEOWISE team to criticize other scientists’ results on the basis of a metric that they did not apply to their own work and rendered impossible for others to apply to their results.

13. Fabricated results. The NEOWISE model curves completely miss all of the data points they claim to fit in 30% to 50% of cases, depending on band and model combination. In effect, these results are fabricated — they clearly do not depend on the data and instead are an artifact of the nonstandard analytical approaches the group used. These results were nevertheless presented as best fits to the data.

Beyond the complete misses, many other curves are very poor fits. The NEOWISE team must have known this; a failure to disclose such a serious issue is unethical. It should go without saying that the quality of model fits is an absolutely essential part of the presentation of results from a study based on model fitting.

Deception and misconduct, part II: The cover-up, 2016 to present

From June 2015 through May 2016, I made the NEOWISE group aware of the Kirchhoff’s law issue. Amy Mainzer replied that I was confused; after that, I received no further comments from her. Undeterred, I periodically sent drafts and updates of my findings to Mainzer, as well as to Edward Wright at UCLA (who is affiliated with NEOWISE) and to Tom Statler of NASA (who is not part of NEOWISE). Wright and Statler did reply to some emails, but they were unable to answer most of my questions.

During this period, I repeatedly sought comments or clarifications. The NEOWISE group had every opportunity to clarify, explain, or show me that I was wrong. Instead they simply refused to answer.

On May 20, 2016, I posted a draft manuscript on arXiv.org (https://arxiv.org/abs/1605.06490v2). This preprint service is commonly used by scientists, especially in physics and astronomy, as a way to get comments and feedback from the scientific community on preliminary results prior to submission for formal peer review.

It was then clear to me that NEOWISE would never respond to private questions. My hope in releasing the manuscript was that it would yield some answers to the questions I had posed to the NEOWISE group, either from them or from others in the field. This preprint, and an earlier paper of mine, were covered by the New York Times on May 24, 2016, and subsequently by other media outlets.

14. Failing to address the issues raised. When my preprint went online, NASA responded by attacking it in a press release. Dr. Amy Mainzer, the principal investigator (PI) of NEOWISE, and Dr. Edward Wright, the PI of the related WISE mission, issued statements to the press. None of these statements actually addressed the issues I had raised, however. Dr. Mainzer sent a written statement to The New York Times that defended the use of copied ROS diameters, but not in the papers that I had called into question. Her defense referred only to an earlier paper (Mainzer et al., 2011c) where ROS diameters had been used in a (mostly) transparent way; I had not voiced any concerns about that paper. No mention was made of the papers that were actually accused.

Dr. Mainzer and Dr. Wright made other false statements as well, like arguing that the violation of Kirchhoff’s law was to be expected because the NEATM model they used also violates the conservation of energy — which is completely false. Offering false defenses and red herrings is deceptive; it is the antithesis of an open and transparent response to criticism. See below under NEOWISE response 1 to: Plagiarism of ROS diameters.

15. Defending copied diameters falsely. On May 27, 2016, on the Yahoo minor planets forum, Joseph Masiero of JPL posted to a discussion thread about my preprint in response to questions posed by Jean-Luc Margot, a professor at UCLA. Margot asked Masiero specifically about point 11 above: the copying of ROS diameters and presenting them as NEOWISE results in one of the accused papers, of which Masiero was the first author. Masiero replied that this was done deliberately and argued that it was valid. He claimed that it was fully referenced in the papers.

The arguments that Masiero raised in that post are easily shown to be false. Masiero’s public admission is irrefutable evidence that the use of copied diameters was deliberate. This is discussed in detail below, with links to the original thread under NEOWISE Response 2 to: Plagiarism of ROS diameters.

16. Fraudulent backdating in an official NASA database. In March 2016, the NEOWISE group began submitting the collected results from NEOWISE papers published between 2011 and 2014 to the NASA Planetary Data System (PDS), an official repository for peer-reviewed scientific results. The latest file dates on the system show that the last change to the NEOWISE data sets in the PDS was made in June 2016. Joseph Masiero was the lead author for the PDS submission.

The files associated with this data archive state clearly and repeatedly that the data in the PDS archive are simply the NEOWISE results that had been previously published in the Astrophysical Journal (ApJ). This is false, however. As part of my independent examination of the NEOWISE papers and PDS data, I discovered that the PDS archive was radically modified from the original publications. Additions to the PDS archive are peer reviewed, but by falsely labelling their results as all identical to previously published figures, the NEOWISE group was apparently able to evade scrutiny.

Misrepresenting the source of results during peer review is unethical and a serious disservice to any scientific user of the PDS archive. It also poses a practical problem for astronomers who based their own analyses on prior NEOWISE papers. This is covered in detail below in How to check: Fraudulent backdating of results in PDS archive.

17. Purging results with copied ROS diameters from the PDS archive. Although documentation for the NEOWISE upload to the PDS states that it compiles diameters and other results from the group’s 2011–2014 papers, all of the 100+ asteroid results that had been copied from ROS papers in the largest NEOWISE results paper (Masiero et al. 2011) were omitted from the PDS archive. To cover this up, new results were introduced with reference codes that cite Masiero et al. 2011, or the follow-up paper Masiero et al. 2014, as “the original publication of fitted parameters,” yet those results never appeared in either of the two papers. The origin of these new results has not been disclosed. See below under How to check: Fraudulent backdating of results in PDS archive.

18. Defending copied ROS diameters while purging them from the PDS. Masiero’s false defense of the plagiarized ROS diameters came within days of the peer review for the PDS archive in which Masiero had purged the copied ROS diameters. If his defense was valid, why purge the PDS of them? This contradiction is discussed below under How to check: Contradiction between Masiero’s public claim and purging from PDS.

19. Altering discrepant results in the PDS. My examination of the PDS archive also revealed that new values were substituted in many cases where NEOWISE results differed from those published by the earlier IRAS study. These replacement values were falsely attributed to earlier NEOWISE publications. Some of the discrepant NEOWISE results were omitted altogether. This is discussed in more detail below in the Notes section, under 8. Irreproducible results.

20. A model code for copied diameters is evident in the PDS. Although none of the copied diameters from Masiero et al. 2011 appear in the PDS, nine results for six asteroids from Mainzer et al. 2011c that have copied diameters were included in the PDS archive. The results archived in the PDS contain extra information not found in the original ApJ papers: a four-letter model code that describes which asteroid parameters were obtained by fitting. The results having copied diameters are marked with the distinctive model code “-VB-”, which means, according to the PDS documentation, that the diameter was not obtained by fitting. It thus must have been set by fiat to some assumed value. This is further evidence that the NEOWISE group’s use of copied diameters was a deliberate technique: they have a model code for using copied diameters.

21. Preventing investigation from others at NASA. After my preprint was released, I sought information on the omitted modeling details that would allow replication of the NEOWISE results by filing a federal public-records request, as allowed by the Freedom of Information Act (FOIA). My request also asked for emails and other communications regarding my preprint. Although NASA went to great lengths to resist producing most of the NEOWISE records I asked for, I was given an email thread in which Tom Statler, a principal research scientist within NASA, indicated that he suspected at least some of my criticisms might be correct. Carrie Nugent, a member of the NEOWISE team, refused to give Statler access to the software that does the data analysis, calling it “proprietary code.” She also refused to give him “unpublished diameters.” Lindley Johnson, who leads NASA’s Planetary Defense Coordination Office, ordered Statler not to talk to a reporter from The New York Times who was investigating the matter.

This internal discussion shows that NASA management failed to properly investigate my claims. Johnson in particular should have sought to get to the bottom of the serious issues I had raised about NEOWISE, a program he supervises, rather than squelching internal and external inquiries. Taken together with all the other evidence, this smacks of a conspiracy to hide the details of what NEOWISE did.

It should go without saying that neither code nor unpublished diameters are the proprietary or private property of the NEOWISE group. This issue is covered in detail in an article published on the scientific misconduct site Retraction Watch.

The term “proprietary code” is diametrically opposite the spirit of open sharing of scientific results from the NEOWISE project, and it suggests that the NEOWISE group attempted to monopolize the observational data set by improperly keeping secret crucial details necessary to replicate the results.

22. Submitting false statements to the Icarus editor and reviewer. During review of my June 2018 paper at Icarus, one of the peer reviewers asked the editor to pose questions to the NEOWISE group. This is a very unusual request, but the editor complied. In addition, the editor sent the group the then-current version of the manuscript.

One of the questions was about the copied diameters and why they were not in the PDS archive. The NEOWISE group responded with a claim that the absence of the results with copied diameters was a coincidence involving how they treat asteroids for which results were presented in both Masiero et al. 2011 and Masiero et al. 2014. It is trivial to show that this claim is entirely false, and I show below how anyone can verify it themselves — see below under NEOWISE Response to: Fraudulent Backdating of Results in the PDS Archive. Lying about an issue like this during peer review of another paper is unethical.

Why the harsh tone?

Some astronomer colleagues have asked me why I have pushed so hard on this. It’s a fair question, because I have not chosen an easy path.

Ever since I can remember, science has been important to me. The scientific method is based on falsifying bad hypotheses and celebrating the ones that survive. When a scientist commits misconduct, it cheats all of us who care about science. While it is rare, it is certainly not unknown. Looking the other way when you find evidence of scientific misconduct is, to me, just as unacceptable as committing it.

Most scientific misconduct that is discovered is done by low-ranking workers within research institutions. Such cases are easy to deal with. When misconduct by a famous and powerful person is revealed, that is much more difficult to handle. Most scientists are leery of speaking up because a powerful foe can thwart investigations, block future research by manipulating the peer-review process, and interfere with grant applications.

A lot of scientists are quite reluctant to discuss these matters, which tarnish the shiny reputation that science has (and deserves). Multiple colleagues in planetary astronomy have privately told me that, even though they agree with my analysis, they think that I should tone down the harsh words. One said that it was for my own good, that it would be “off-putting” to those reading my papers.

The whole discussion was a bit like talking about an unmentionable topic to Victorian-age gentlemen, who would protest “Sir! A gentleman does not speak of such things!” The Victorians wouldn’t talk about a lot of things, but society has moved on from the Victorian age. We have learned that if some topics are unspeakable, that actually makes it more likely that they will occur. People will remain quiet about unmentionable behavior even when they have evidence of it. Worse still, if the very topic is considered scandalous, then anyone who musters the courage to bring the scandal to light can be viewed with suspicion, as if any association with scandal, even in uncovering it, taints those involved. I think that is what was meant by this topic being “off-putting” to people reading my research.

Another colleague suggested that “such matters are best handled in private.” I almost laughed when I heard this because the situation is clearly the opposite — it could only be handled in public.

Early on, I tried to talk to the NEOWISE people in private. It didn’t work, because they would not engage. Then I tried bringing it public with a preprint on arXiv.org. But, as it turns out, the very NASA managers who should have been supervising the project were more interested in protecting it from scrutiny.

The public airing of the issues got some response, but not what I hoped. Not a single valid technical objection was raised by the NEOWISE team. Instead Amy Mainzer of JPL and Ned Wright of UCLA sent written statements or gave interviews to reporters that were laced with falsehoods. The Washington Post reported that, according to Mainzer and Wright, the Near-Earth Asteroid Thermal Model (NEATM) employed by NEOWISE does not respect the conservation of energy. That is completely absurd. The whole point of the beaming parameter eta in the NEATM is to impose conservation of energy and to thereby quantify the unobserved energy.
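The role of eta can be made concrete with the commonly quoted NEATM expression for the temperature at the subsolar point, T_ss = [(1 − A) S / (eta ε σ r²)]^(1/4), where A is the Bond albedo, S the solar flux at 1 au, ε the emissivity, σ the Stefan-Boltzmann constant, and r the distance from the Sun in au. The Python sketch below is a textbook-style illustration, not the NEOWISE code: the function name, the solar constant of 1361 W/m², the default emissivity of 0.9, and the parameter values in the example are all assumptions made for illustration.

```python
SIGMA = 5.670374419e-8    # Stefan-Boltzmann constant, W m^-2 K^-4
SOLAR_CONSTANT = 1361.0   # approximate solar flux at 1 au, W m^-2 (assumed value)

def subsolar_temperature(bond_albedo, r_au, eta, emissivity=0.9):
    """Subsolar temperature in K from the standard NEATM energy balance.

    The absorbed sunlight (1 - A) * S / r^2 is set equal to the emitted
    flux eta * emissivity * sigma * T^4 and solved for T.
    """
    absorbed = (1.0 - bond_albedo) * SOLAR_CONSTANT / r_au ** 2
    return (absorbed / (eta * emissivity * SIGMA)) ** 0.25

# Illustrative parameter values only; they do not describe a real asteroid.
albedo, r_au, eta = 0.05, 2.5, 1.2
t_ss = subsolar_temperature(albedo, r_au, eta)
absorbed = (1.0 - albedo) * SOLAR_CONSTANT / r_au ** 2
emitted = eta * 0.9 * SIGMA * t_ss ** 4
print(f"T_ss = {t_ss:.1f} K, absorbed = {absorbed:.2f}, emitted = {emitted:.2f} W/m^2")
```

In this form the formula is nothing more than an energy balance at the subsolar point: the flux emitted, scaled by eta, equals the sunlight absorbed.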

Say what you will about me (and they did!), but when physicists start making false statements about the conservation of energy, that shows that their response isn’t about actually communicating an error — it’s a smokescreen.

The response on the plagiarism of diameters from other works was also telling. Mainzer’s reply (as detailed below) defended a paper that I had not called into question, and just ignored the papers that I had challenged. It was a classic redirect, something we’ve come to expect from politicians or trial attorneys, but sad to see in a scientist.

I was stunned at the arrogance that Wright and Mainzer showed. They seemed to feel secure enough in their positions that they saw no need to answer with any substance at all. They seemed to believe that they could say anything with impunity, confident that this would all blow over. But I am not so easily deterred.

The central point hammered over and again in NASA’s press release and Mainzer’s comments to reporters was that my preprint was only that, a preprint and not yet a peer-reviewed paper published in a respected journal. They suggested that it should be completely disregarded until it had been given the imprimatur of the establishment.

Their objection was truly silly when you think about it. Who needs peer review to verify a charge of plagiarism? Copied figures are identical no matter who looks at them. Peer review is also inappropriate for another reason: peer-reviewed journals are about science, not about misconduct, and investigating misconduct isn’t the charter of most scientific journals. As one scientist put it to me, journals publish investigations into science, not investigations of people who practice science.

When my preprint first appeared, I got some interesting emails from well-established planetary astronomers. One started off welcoming me to the club of people whose research the NEOWISE group has decided to stifle. These people told me their experiences, which ranged from being denied simple information about NEOWISE’s methods to seeing the NEOWISE group try to kill other researchers’ papers in peer review. I was warned not under any circumstance to let them be reviewers because they would not fight fair. This was also rather telling to me: besides the misconduct I suspected, the NEOWISE team had a reputation of defending “their” territory in peer review.

So I submitted my preprint, which I later split into two papers, each of which did withstand intensive peer review. Both have now been published in Icarus, the most prestigious journal of planetary science. The fact that the results were bound to be controversial made this process take vastly longer than it would have otherwise. The reviewers and editor exercised extreme care, worried about the implications. That ultimately made the papers better, but it certainly wasn’t a quick process.

But the findings and math that were in my preprint, and that Mainzer and Wright had heaped derision upon, did hold up under all of this extra careful scrutiny. In fact, the list of scientific and data-processing errors that I spotted in the NEOWISE results only grew during peer review, as did the evidence of misconduct.

Will this be enough to get NEOWISE to actually present an explanation? It’s too early to tell, but by this point what might have been handled simply and collegially is something else entirely. So yes, it has gotten harsh, and yes, the stakes have gotten high. What could have been a very simple issue for them is now quite serious.

I didn’t push it to this place by myself. At every stage I had only two options: to drop it entirely, or to press on. I pressed on, and I don’t feel apologetic about it.

Why this matters

Arguing over the size of rocks that are typically more than 200 million miles away may seem like an esoteric topic—the 21st-century equivalent of debating how many angels can sit on the head of a pin. Does this really matter?

I think it does matter, for multiple reasons. Science is ultimately the source of innovation in our modern technological society, and it requires open and transparent communication to function properly. The scientific method is to test hypotheses against experiments and observations. Sometimes the empirical data confirm what we expect, but often they don’t—and both outcomes can advance our knowledge of the world.

When scientists surreptitiously copy results or engage in the other kinds of suspect activities that the NEOWISE team have, they cheat that process and deliver a serious blow to the integrity of the scientific process.

Integrity, openness, and transparency matter even more in science than other areas of professional life because without these, scientific results cannot be replicated and independently confirmed as genuine. We scientists must respect the integrity of the process, no matter what our subject of study.

Beyond general principles, the study of asteroids is quite important scientifically. It is a very active area of research around the world because asteroids can tell us a lot about the origin of the solar system—and because there is a 100% probability that a major asteroid impact will affect life on Earth at some point in the (possibly distant) future, just as it has multiple times in the past.

In 2011, NASA’s Dawn probe visited Ceres and Vesta, the two largest asteroids, at a cost of $446 million. The NASA OSIRIS-REx mission is currently en route to asteroid Bennu, where the plan is for it to collect a sample of the asteroid and return it to Earth for analysis. The cost of that mission is about $800 million. NASA has also approved two future asteroid missions, Psyche and Lucy, at a likely total cost of $400 million to $600 million.

Meanwhile, the NEOWISE group has proposed building and launching a new space telescope, NEOCam, to hunt for asteroids at an estimated cost of $400 million to $600 million. NEOCam competed with Psyche and Lucy for funding, but lost. The NEOWISE group has since been trying to persuade Congress to earmark funding for NEOCam, with some progress.

The Japanese space agency JAXA has also launched two asteroid probes, Hayabusa and Hayabusa 2. The European Space Agency funds asteroid research and has considered fielding asteroid missions.

Although these missions are all distinct from NEOWISE, they show that asteroid science is quite important: important enough that it’s become a multi-billion dollar “industry” within science, where research funding is spent very carefully.

That is one of the reasons that the NEOWISE papers have been referenced by hundreds of other scientific papers. A quick search on Google Scholar shows that the most cited NEOWISE paper has 285 citations as of this writing. Four of the other NEOWISE papers have 95 to 285 citations. And a search for “NEOWISE” turns up 1440 papers referencing the project.

Each of the NEOWISE papers typically has several co-authors. All told, the work of hundreds of scientists has made use of NEOWISE data. Within planetary science, asteroids are a big deal, and so is NEOWISE.

Asteroids are also important to all of us here on Earth, non-scientists and scientists alike. We know that an asteroid killed the dinosaurs. In 1908, an asteroid impact flattened Tunguska, Siberia. And in 2013, a smaller meteor exploded in the air over Chelyabinsk, Russia. The NEOWISE results are critical to assessing the risk of future impacts, which in turn depends critically on having accurate diameter estimates. In recognition of this risk, Congress directed NASA in 2005 to identify 90% of all near-Earth objects that are 140 meters in diameter or larger by the end of 2020. That work is coordinated by the office at NASA that oversees NEOWISE.

Finally, the integrity of NEOWISE is important because it is a costly project paid for entirely by taxpayers. The total price tag for the WISE mission, on which NEOWISE is based, was at least $300 million. Spending on the NEOWISE reactivation mission has cost about $15 million since 2013. The total cost of NEOWISE is that figure, plus some portion of the WISE budget — so easily $20 million and likely much more.

The NEOWISE team consists largely of full-time government employees. Their duty is to create results that the rest of the scientific establishment can use. When they cheat, it’s no different than any other case of a powerful government employee cheating—it is a betrayal of the public’s trust, and it wastes public money.

Could there be another explanation?

From the outset, I have considered the possibility that this might not be misconduct. Indeed the default assumption one brings to any scientific publication is that scientists are open and honest.

At this stage the possibility that this is all an honest error is impossible to support. The pattern of deception continues in every communication from the NEOWISE group. If this was an honest mistake, why didn’t they just say so at the outset? Instead there has been no transparency from the NEOWISE team and a steady stream of further deception and misconduct.

At first I wondered whether the copied diameters could have occurred accidentally. However, by now three independent lines of evidence say this is not the case. As a start, Joseph Masiero says it was deliberate — and most of the occurrences are in a paper for which he was the lead author. In addition, the existence of the code -VB- shows that they had an official model code for copying.

One could propose that an early draft of the two papers that use copied diameters did have a proper disclosure of the practice, and that it was somehow omitted by accident in publishing. The direct way to check would be to look at early drafts.

However, it seems very hard to reconcile this scenario with the fact that in another paper the NEOWISE group uses a comparison with ROS diameters to criticize IRAS and the Ryan and Woodward studies (issue 12 above.) A clear disclosure that NEOWISE had copied ROS diameters rather than allowing them to be used for a similar analysis would make it very obvious how contradictory they were being.

Even if one supposes that the NEOWISE group did properly reference their copying of the diameters, one must then grapple with the fact that Masiero made all of the copying disappear from his own papers as recorded in the PDS archive. This included making repeated false statements within the PDS archive saying that the material was due to “original fitted diameters.” Worse, it included taking new results that had never previously been published and retroactively attributing them to past papers. If the NEOWISE team really thought that the copied diameters were valid, why retroactively purge them? Why try to alter the past?

Why would they do this?

Why would a successful scientific team engage in such behavior? That is always hard to fathom, but it nevertheless does occur. Cases like Diederik Stapel and Jan Hendrik Schön have shown that even researchers who are at the top of their field have been guilty of misconduct. They were both smart, capable people who surely could have had successful careers without the fraud, so why do it?

Without access to the team’s working drafts and communications with other scientists outside NASA we can only speculate on possible motives. One scenario in particular seems consistent with the evidence in hand, and with prior cases of misconduct by other scientists: it could have been driven by ambition and a desire to be seen as the best in the field, coupled with some basic incompetence. It’s a bad combination.

The NEOWISE project set very high goals for itself, claiming accuracy for diameters that is better than 10% — twice as good as previous thermal-modeling efforts had claimed. This claim, initially made in their 2011 papers, is echoed all the way through a 2015 review paper with Amy Mainzer as its first author. They may have set this accuracy goal up front, then made questionable choices to ensure they hit it.

It is unclear whether the NEOWISE data set, or asteroid thermal modeling more generally, could ever achieve the accuracy claimed. But it was certainly not possible given that the NEOWISE team made some basic scientific and statistical errors from the outset. They dealt with reflected sunlight in a way that ignores Kirchhoff’s laws. They did not realize that the flux uncertainties created by the WISE pipeline dramatically underestimated the true error in the fluxes. These issues are covered in detail in my Icarus papers and are also described below.

They very likely tried to use minimum chi-squared fitting. This is a very common technique in science, but it is doomed to failure if the stated measurement errors are too small, which they were in this case: the WISE pipeline flux errors are too small by factors of 2.5 to 1.3, depending on the band. In addition, they did not seem to realize that variations in flux caused by the asteroids not being perfectly spherical also contribute to the effective error for the minimum chi-squared fitting. A different statistical method would have helped, but they did not appear to be very statistically sophisticated (for example, they use only 25 trials in their Monte Carlo error analysis, far too few for a meaningful result).
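To make the statistical point concrete, here is a minimal sketch in Python. It is not the NEOWISE pipeline or the analysis from my papers; it is a toy simulation that assumes Gaussian noise on a constant flux, and the function names are my own. It shows how the reduced chi-squared of a perfectly good model inflates when the reported error bars are too small by a factor of 2.5, and how uncertain a standard deviation estimated from only 25 Monte Carlo trials is under the same Gaussian assumption.

```python
import random
import statistics


def reduced_chi2(values, reported_sigma):
    """Reduced chi-squared of a constant (mean) model with one fitted parameter."""
    mean = statistics.fmean(values)
    chi2 = sum(((v - mean) / reported_sigma) ** 2 for v in values)
    return chi2 / (len(values) - 1)


def simulate(true_sigma=1.0, underestimate_factor=2.5, n=2000, seed=0):
    """Draw n noisy measurements of a constant flux, then score the fit twice:
    once with the true error bar and once with an error bar that is too small
    by `underestimate_factor`."""
    rng = random.Random(seed)
    values = [10.0 + rng.gauss(0.0, true_sigma) for _ in range(n)]
    honest = reduced_chi2(values, true_sigma)
    too_small = reduced_chi2(values, true_sigma / underestimate_factor)
    return honest, too_small


def relative_error_of_sigma(trials):
    """Approximate fractional uncertainty of a standard deviation estimated
    from `trials` Gaussian samples: 1 / sqrt(2 * (trials - 1))."""
    return (2.0 * (trials - 1)) ** -0.5


honest, too_small = simulate()
print(f"reduced chi2 with correct errors: {honest:.2f}")
print(f"reduced chi2 with errors 2.5x too small: {too_small:.2f}")
print(f"sigma uncertainty from 25 trials: {relative_error_of_sigma(25):.1%}")
```

In this toy case the inflated value is exactly 2.5 squared (6.25) times the honest one, so a fit that is actually fine looks badly wrong, and any procedure that rejects or reweights results on the basis of chi-squared will be misled.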

The pattern of unconventional and scientifically unjustified steps they took in data analysis, all cataloged in my Empirical paper in Icarus, suggests that they were very concerned with making their project look successful, but were running into problems. In order to reduce the variation, they chopped the data up into arbitrary 3- to 10-day epochs. They invented rules which had the effect of discarding even more data. They appear to have put constraints on their analysis to eliminate bad looking results.

Note that it is clearly documented in their papers that they did these things — that isn’t in question. I am speculating here as to why they did them. It certainly seems suspicious that their ad hoc data analysis rules had the effect of limiting the scope and variation within the data that they analyzed.

Meanwhile, they were on a tight schedule to produce results. The WISE mission flew in 2010, and the world expected results to be published soon. Indeed NASA funding may have required it. The series of errors in their scientific understanding and statistical data analysis made it very difficult to achieve the ambitious goals they seem to have set for themselves.

For example, they sharply criticized two previous studies, IRAS and modeling by Ryan and Woodward, by comparing them to radar, occultation, and spacecraft (ROS) estimates of diameter. Comparing to ROS estimates is the only way to get an independent assessment of the diameter estimation accuracy.

Strikingly, rather than compare NEOWISE results in the same way that they did the other studies, they adopted a bizarre approach that is essentially backwards. Rather than see if their diameter estimates match ROS, they calculated fluxes from hypothetical asteroids with ROS diameters and checked whether those match the observed fluxes. Then they relied on little more than a hunch that this would allow them to infer diameter accuracy. As shown in my June 2018 Icarus paper, this is not a valid way to compare diameters. Why use this backward approach?

The simplest explanation is that the comparison to ROS was not favorable to the actual model-fit estimates for the asteroids for which ROS estimates were available; that is, the comparison failed to meet their accuracy goals. We don’t know what the actual comparison was, because of the plagiarism. But it seems illogical to hide good results with plagiarism. So I presume that the actual diameters were estimated in the same way all of the other results were: embarrassingly badly.

Rather than publish NEOWISE diameter estimates that showed they were inaccurate, I believe that they copied the ROS diameters and did not publish what their own methods would give. They did this for essentially all asteroids with ROS diameters available at the time of their publication in 2011.

With that move, they probably felt secure. If anybody checked they would see excellent accuracy. No external group could prove that the true NEOWISE estimates of diameter for these asteroids had bad accuracy because they could not replicate the results.

As shown below, it is trivial to pick out the copied ROS diameters from the actual NEOWISE results because the ROS diameters are typically rounded to the nearest km, while NEOWISE presents to the nearest meter. The copied ROS diameters stick out because they end in “.000”.

It would have been far harder to detect the fraud had they made up diameters with random digits in the last three places. I am speculating here, but I think that shows that the NEOWISE team was not fully fraudulent in the beginning. Making up diameters was a step too far. Instead they copied ROS diameters and told themselves that if it was ever discovered, they could try to fall back on some convoluted argument that this was scientifically justified. Indeed that is exactly what Masiero did, as shown below under NEOWISE Response 2 to: Plagiarism of ROS Diameters.

The diameter plagiarism and the invalid backwards accuracy analysis were coupled with aggressive claims that their results were good, without presenting the direct comparison to ROS that would back up that claim. Peer review should have caught this but didn’t. One reason may be that the analysis and claims were spread across multiple papers, so it wasn’t the same reviewer. I have no way to know what happened in their review process, but it clearly missed the issue. That isn’t too surprising: peer review is not intended to catch outright fraud or misconduct. They also wrote their papers in a way that looked complete enough to satisfy peer review yet omitted crucial details that others would need to replicate their results, even those diameters that they had not copied.

Many scientists I have spoken to have told me that the NEOWISE group refused to answer questions necessary for replication. Their apparent policy of refusing to answer questions from other scientists attempting replication of their work is consistent with this strategy; a wall of secrecy would protect their dissembling about the accuracy of their results.

Unable to replicate the NEOWISE results, other scientists were forced to either accept them on blind faith or take the risky step of challenging them publicly. All but me took the safer route, hesitating to publish papers that tried to re-analyze the NEOWISE observation data in competition with the NEOWISE group. One senior scientist told me outright that he just gave up on using the NEOWISE data set — he couldn’t reproduce the NEOWISE results, and he wasn’t confident enough to challenge the NEOWISE group.

This protected their accuracy claims. The strategy of secrecy also allowed the NEOWISE group to exert a monopoly on writing papers using the observational data, long past the point when other astronomers should have been using it. It is very hard to use a data set if you can’t replicate the key publications associated with it. A scientist in that position must first worry whether the failure to replicate means there is a complexity they don’t understand. Then, to actually push forward, they must challenge the NEOWISE group head on. It’s easier and safer to find something else to work on.

Around the time all of this was going on, the NEOWISE group was advocating for a major new space telescope project, called NEOCam, and seeking $400 million to $600 million in funding from NASA to get that started. A big selling point of NEOCam is that it would be run by the team that was successful with NEOWISE. A scandal over NEOWISE could easily scuttle their ability to sell this huge project. NEOCam had made it onto a short list of projects that were up for NASA Discovery mission funding when I first found the problems with NEOWISE.

Under these circumstances, the NEOWISE group appears to have made the calculated decision that it was better to deny everything than to address the issues I raised, or to admit any mistakes at all.

The problem with this approach is that the longer you deny things, the worse it gets. As is often the case, the cover-up is worse than the crime. Had I given up, however, the strategy would have worked. Indeed all they really needed to do was to kick the can down the road long enough that NEOCam would get funded.

Ultimately NEOCam failed to win Discovery project funding on two different occasions. It nevertheless managed to stay viable by getting a smaller amount of funding to continue incremental work. Now the NEOWISE team’s hopes hang on Congress writing specific line-item funding into the NASA appropriations bill — a kind of end-run around the competitive programs, like Discovery, that NASA runs to compare the scientific merits of competing proposals.

The facts of misconduct I have laid out speak for themselves. But I think it helps to put these in context to understand how this could be a case of scientists, under pressure to produce for NEOWISE and to be chosen for NEOCam, overreaching in their scientific work. We have seen many examples of just this kind of pressure leading to scientific misconduct in the past. That doesn’t excuse it, but it can help explain it.

Although this account fits the observed facts that I am aware of, there are clearly many facts of which I am not aware. The true story could be quite different from what I’ve speculated here. My hope is that NASA officials or others in government, as well as others in the astronomical community, will fully investigate the matter and release the full set of facts so that we can ensure problems like this don’t recur in the future.

Simple ways to verify the misconduct yourself

An arcane argument between specialists is difficult for others to follow. Some of the arguments that I make rely on complicated calculations that are contained in my two Icarus papers. However the misconduct issues are actually very simple to verify — the biggest one is just copying, after all.

Each of the key issues is handled in a section below. With a few web clicks, you can see proof of what I have found. The key claims can all be verified with an internet browser.

The data files are text files that would be easy to read were it not for the fact that some are extremely large — much larger than the size that is supported by typical text viewing or editing software. To verify my claims, the first few lines are usually enough, and this gives proof of the misconduct.
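If you would rather not open a huge file in an editor at all, a few lines of Python can read just the top of it, or pull out the rows for one asteroid, without loading the whole file into memory. This is only a sketch: the function names are mine, and it assumes you have already saved a local copy of one of the data files and that the asteroid number is the first comma-separated field on each line.

```python
from itertools import islice


def head(path, n=10):
    """Return the first n lines of a text file without reading the rest."""
    with open(path, "r", encoding="utf-8", errors="replace") as handle:
        return [line.rstrip("\n") for line in islice(handle, n)]


def rows_for_asteroid(path, number):
    """Yield every line whose first comma-separated field equals `number`,
    reading the file one line at a time."""
    wanted = str(number)
    with open(path, "r", encoding="utf-8", errors="replace") as handle:
        for line in handle:
            if line.split(",", 1)[0].strip() == wanted:
                yield line.rstrip("\n")


# Example usage (the file name is whatever you called your local copy):
#   for line in head("neowise_mainbelt.tab", 12):
#       print(line)
#   for line in rows_for_asteroid("neowise_mainbelt.tab", 2):
#       print(line)
```

Because both functions stream the file line by line, they work the same way on a file of a few kilobytes or several hundred megabytes.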

However, if you want to check every asteroid, you will need to go deep into the data files. This requires software that can handle huge text files. There are many solutions to this problem, but my favorite is a text editor called EmEditor, which is a free download. It is extremely fast and easy to use for large text files. Asteroids with a short enough number are listed by that number in the leftmost column. For asteroids with bigger numbers, or asteroids with non-numerical (provisional) designations, finding an entry requires decoding the name, which is given as an MPC packed designation.
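For readers who want to decode the packed names programmatically, here is a short Python sketch based on my understanding of the Minor Planet Center packed designation format. The function names are my own. It handles 5-character packed permanent numbers and standard 7-character packed provisional designations only; survey designations (such as the Palomar-Leiden objects), comets, and any newer extended formats are deliberately not handled and raise an error.

```python
import string

# Base-62 digit order used by MPC packed designations: 0-9, A-Z, a-z.
BASE62 = string.digits + string.ascii_uppercase + string.ascii_lowercase


def unpack_number(packed):
    """Decode a 5-character packed permanent number, e.g. 'A0345' -> 100345."""
    packed = packed.strip()
    if len(packed) != 5:
        raise ValueError(f"expected 5 characters, got {packed!r}")
    if packed[0] == "~":
        # Numbers of 620000 and above: '~' followed by four base-62 digits.
        value = 0
        for ch in packed[1:]:
            value = value * 62 + BASE62.index(ch)
        return 620000 + value
    # Below 620000: the first character is a base-62 digit counting
    # tens of thousands, followed by four ordinary decimal digits.
    return BASE62.index(packed[0]) * 10000 + int(packed[1:])


def unpack_provisional(packed):
    """Decode a 7-character packed provisional designation,
    e.g. 'K07Tf8A' -> '2007 TA418'."""
    packed = packed.strip()
    if len(packed) != 7 or packed[0] not in "IJK":
        raise ValueError(f"unsupported packed designation: {packed!r}")
    century = {"I": 18, "J": 19, "K": 20}[packed[0]]
    year = century * 100 + int(packed[1:3])
    half_month, second_letter = packed[3], packed[6]
    # The cycle count is a base-62 "tens" character followed by a decimal digit.
    cycle = BASE62.index(packed[4]) * 10 + int(packed[5])
    suffix = str(cycle) if cycle else ""
    return f"{year} {half_month}{second_letter}{suffix}"


print(unpack_number("00002"))
print(unpack_number("A0345"))
print(unpack_provisional("J95X00A"))
print(unpack_provisional("K07Tf8A"))
```

The example inputs are the standard illustrations of the format, so the output can be checked against the Minor Planet Center's own description.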

Each of the relevant original NEOWISE papers was published in the Astrophysical Journal (ApJ) and is open access. The key papers, and where to download them, are listed here in this format:

Short citation: ApJ volume, page (PDS abbreviation)

URL of Paper

Masiero et al. 2011: ApJ 741, 68 (Mas11)

https://doi.org/10.1088/0004-637X/741/2/68

Masiero et al. 2014: ApJ 791, 121 (Mas14)

https://doi.org/10.1088/0004-637X/791/2/121

Mainzer et al. 2011a: ApJ 736, 100 (n/a)

https://doi.org/10.1088/0004-637X/736/2/100

Mainzer et al. 2011b: ApJ 737, L9 (n/a)

https://doi.org/10.1088/2041-8205/737/1/L9

Mainzer et al. 2011c: ApJ 743, 156 (Mai11)

https://doi.org/10.1088/0004-637X/743/2/156

Note that there are three Mainzer et al. papers in 2011 with the same list of authors and very similar titles, so it is common to refer to them by their ApJ volume and page numbers (for example, ApJ 736, 100).

The PDS archive of NEOWISE results contains references to papers that have published results, with abbreviations that are shown above in parentheses. The papers noted as “(n/a)” did not include asteroid results.

Below is a section for each of the most important examples of misconduct, prefaced by “How to check:….” In addition, I have presented sections titled “NEOWISE response:…,” in which I discuss the answers that the NEOWISE team has given — not directly to me, but in communications to the Icarus editor, in press interviews, or in written statements to the press or for public release.

How to check: Plagiarism of ROS diameters

The main NEOWISE result paper, which contains over 90% of the results, is Masiero et al. 2011, available in PDF or HTML.

The original table is reproduced below and is also available at http://iopscience.iop.org/0004-637X/741/2/68/suppdata/apj398969t1_lr.gif

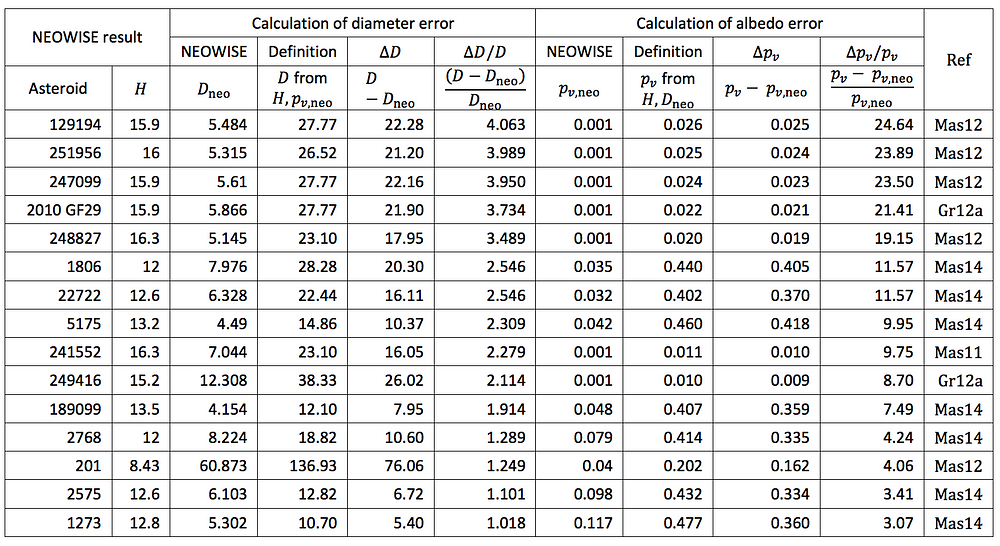

In the reproduced table above, I have drawn red and green boxes around some of the asteroid entries. Note that the diameters in the red boxes, which are in units of kilometers, all end in “.000.” In contrast, the diameters for the asteroid highlighted by the green box include digits all the way out to the nearest 0.001 km, which is the nearest meter.

Note also that some asteroids, such as asteroids 00002 and 00009, appear on multiple rows because NEOWISE produced multiple results for some asteroids.

The diameters boxed in red above — as well as more than 100 others not shown here — are exactly equal, to the nearest meter, to ROS diameters published in papers well before the NEOWISE studies.
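The “.000” test is simple enough to automate. The sketch below, in Python, flags any diameter that is a whole number of kilometers. The function names are mine, and the input is assumed to be a list of (asteroid, diameter-as-text) pairs that you have typed in or extracted from the table yourself. The first two rows use the Masiero et al. 2011 values for asteroids 2 and 5 discussed in this article; the third row is a made-up value included only to show an entry that is not flagged.

```python
from decimal import Decimal


def is_whole_km(diameter_text):
    """True when a diameter string such as '544.000' has no fractional part."""
    value = Decimal(diameter_text.strip())
    return value == value.to_integral_value()


def flag_whole_km(rows):
    """Return the (asteroid, diameter) pairs whose diameter is a whole number of km."""
    return [(name, diameter) for name, diameter in rows if is_whole_km(diameter)]


rows = [
    ("00002", "544.000"),  # value quoted in this article
    ("00005", "115.000"),  # value quoted in this article
    ("99999", "123.456"),  # hypothetical row, for illustration only
]
print(flag_whole_km(rows))
```

A whole-kilometer value is a flag, not proof by itself: if the last three digits of a genuine fit were effectively random, roughly one result in a thousand would end in “.000” by chance. The proof comes from matching the flagged values against the published ROS diameters.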

The supposed NEOWISE results aren’t NEOWISE results at all — they were directly copied!

You can check this yourself. Asteroid 2 (also known as Pallas) has a diameter given as 544.000 km in the table above from Masiero et al., 2011. Compare it to the entry for that asteroid in Shevchenko and Tedesco, 2006, one of the ROS papers, which is available at http://www.sciencedirect.com/science/article/pii/S001910350600128X.

In Table 1 of the Shevchenko paper we find this:

Here, column Docc lists diameters determined by the occultation method. In the case of asteroid 2 Pallas (aka 00002), that value matches the Masiero value exactly. Turning to another ROS source, Durech et al. 2011, available at http://www.sciencedirect.com/science/article/pii/S0019103511001072 or http://arxiv.org/abs/1104.4227, we find the following table.

Check out the value for the diameter of asteroid 5 Astraea (aka 00005): it is 115 km, exactly equal to the diameter listed in the Masiero Table 1 above. You may also notice that asteroid 2 Pallas is also in this table, with a different diameter than in the Shevchenko or Masiero tables. That is typical; one almost never gets the same number from these ROS studies, mainly as a result of measurement error.

Altogether there are more than 100 asteroids that I have found that had a diameter from a previous ROS study copied and presented in a table of NEOWISE fits.

In my June 2018 paper, this is covered in Section 4, and a full table of all of the copied diameters, along with their original sources, is given in Table 8 and Table S1 of the Supplementary Information.

NEOWISE response 1 to: Plagiarism of ROS diameters

In a written statement about my paper sent to reporter Ken Chang for The New York Times, Dr. Mainzer said:

In Mainzer et al. 2011 Astrophysical Journal 736, 100, radar measurements were used as ground truth in order to compute model WISE and visible fluxes, which were compared to the measured fluxes and found to be in good agreement (Figure 3). This is how the WISE flux calibration for asteroids was validated. The paper reported the radar diameters, because these were held fixed so that the predicted WISE fluxes could be computed. The caption for Table 1 of the paper states “The diameters and H values used to fit each object from the respective source data (either radar, spacecraft imaging, or occultation) are given.”

And in a statement given to reporter Eric Hand for Science, Dr. Mainzer reiterated, referring to section 4.3 of the preprint, which discusses the copying of ROS diameters:

The paper mischaracterizes the use of the radar/occultation/flyby diameters. In Mainzer et al. 2011 ApJ 736, 100 , the NEOWISE team uses these diameters as calibrator targets to compute model brightnesses and compare them to the measured brightnesses for the objects. They are in excellent agreement. The radar/occultation/flyby sources are cited in this paper; later papers reference this calibration paper.

These “explanations” are quite deceptive. I did not “mischaracterize” the ApJ 736, 100 paper — indeed it is not the paper at issue at all!

The full title of the ApJ 736, 100 paper is “Thermal Model Calibration for Minor Planets Observed with Wide-Field Infrared Survey Explorer/NEOWISE.” In that paper, Mainzer and her coauthors do use the ROS diameters to “calibrate” the colors and other aspects of their thermal model.

Their goal is to make sure when they calculate their color correction (to adjust to the properties of the WISE sensor) that they get roughly the same observed IR flux from the test objects using the ROS diameters as they see from the asteroids they represent. This is the “backwards” method of determining diameter estimation accuracy alluded to above. It is discussed in depth in my papers.

To do this, the ApJ 736, 100 paper compares the observed flux (IR brightness) from a set of 48 asteroids to the flux calculated from a hypothetical model asteroid with a ROS diameter. Again, the use of hypothetical test objects for calibration purposes in the ApJ 736, 100 paper is not at issue here. The ROS studies that provide the diameters are mostly referenced, but even in cases where they do not correctly reference the source, it is clear that the diameters are from ROS sources.

The problem instead occurs in the papers that purport to publish NEOWISE results: the Masiero et al. 2011 study (aka Mas11) and the Mainzer et al. 2011c study (aka Mai11). In those papers, the diameters match ROS figures for more than 100 asteroids — far more than the 48 hypothetical test objects in the ApJ 736, 100 paper. There is no explanation in those papers that diameters were copied, let alone a justification for why it was done. Nor can I imagine any justification that would pass scientific muster.

It is quite deceptive to answer a clear claim of misconduct in the Mas11 and Mai11 papers with a rousing defense of the ApJ 736, 100 paper, which wasn’t accused at all. Dr. Mainzer apparently assumed that the New York Times, and its readers, would be satisfied with a scientific-sounding excuse for an entirely different paper. Indeed some journalists did buy this argument and wrote articles in support of NASA.

These flagrant attempts to duck the issue are why I am writing this document to explain things — so that it is harder to mislead the press, the public at large, or other scientists with spurious non-answers.

A second and independent argument advanced by NEOWISE appears next.

NEOWISE response 2 to: Plagiarism of ROS diameters

In my original 2016 preprint and interviews given to The New York Times and other journalists, I was not sure whether the copying of diameters was deliberate. However, Joe Masiero soon cleared that up in a post he made on the Yahoo MPML (minor planets mailing list).

On May 27, 2016, on the Yahoo minor planets forum, Joseph Masiero of JPL was in a discussion thread about my preprint with Jean-Luc Margot, an astronomy professor at UCLA. Margot asked Masiero about the copying of ROS diameters and presenting them as NEOWISE results. Masiero replied as follows:

32047

Re: {MPML} Challenge to Asteroid Size Estimates

Joe Masiero

May 27, 2016

Hi Jean-Luc,

Regarding the diameters, as explained in the first thermal model calibration paper (Mainzer et al. 2011 ApJ 736, 100), the radar diameters were held fixed and were used to derive fluxes to verify the color correction calibration. We quote those fits in that paper, as well as the later MBA and NEO papers, both of which reference the 2011 ApJ 736, 100 paper in this respect. As described in this paper, if the radar or occultation diameters were available, they were used since they allow for improved solutions for the other free parameters such as beaming, and the thermal model calibration paper was cited (see e.g. Section 3 of Mainzer et al. ApJ 2011 743, 156). For more information, you can consult the 2011 ApJ 736, 100 paper.

…

The note on the Yahoo board is clearly a professional correspondence between scientists that is “on the record” and published publicly. The key point for our present topic is that Masiero confirms that this practice was entirely deliberate and very clearly not an accident. He defends it as being a legitimate and proper course of action.

We can consider each of the justifications given by Masiero in turn.

The first justification for using the copied ROS diameters is that “they allow for improved solutions for the other free parameters such as beaming.”

The claim that their goal was a better estimate of the beaming parameter or other free parameters is undercut by the fact that the primary goal of their papers is to determine diameter and visible-band albedo (which is a direct consequence of diameter). Indeed the Mas11 paper claims this in its abstract:

Using a NEATM thermal model fitting routine, we compute diameters for over 100,000 Main Belt asteroids from their IR thermal flux, with errors better than 10%.

There are no claims or mentions made about better solutions of the beaming parameter for these asteroids.

An even more basic objection is this: having “better” beaming parameter results only matters if it is disclosed to the readers of the paper. If more than 100 results have a better beaming parameter, but the paper never mentions which results these are, or even that any such results exist, then the improvement is useless to other scientists.

The argument that this is a carryover from the ApJ 736, 100 paper is plainly false. Numerically this can’t possibly be true. The ApJ 736, 100 paper studies 50 objects: 48 asteroids and two moons. Yet the copying affects at least 105 asteroids.

Masiero states that the other NEOWISE papers reference the ApJ 736, 100 paper “in this respect,” meaning with respect to using the results based on copied diameters from the ApJ 736, 100 paper. He specifically cites section 3 of Mainzer et al. 2011 ApJ 743, 156 (or Mai11). This paper also uses copied ROS diameters for nine results covering six asteroids.

In the Mai11, ApJ 743, 156 paper, there are 4 mentions of the ApJ 736, 100 paper (called Mainzer et al. 2011b or M11B in the references for that paper). Here are all four of them for your convenience. In each case, I have quoted the full sentence that the reference is in.

To verify the excerpts above one can simply search in the PDF of the Mai11 paper for mentions of “M11B.”

None of these quotes makes any mention of using ROS diameters. Nor is there any mention of better estimates of the beaming parameter. It is simply not the case that the Mainzer et al. ApJ 743, 156 paper references the ApJ 736, 100 paper (aka M11B) “in this respect.” Instead the references to that paper are strictly limited to methodology of WISE magnitudes (first quote), the use of thermal models NEATM, STM, FRM none of which are conventionally understood to be based on use of copied diameters (second quote), the use of the H-G phase system (third quote), or the conclusion that the diameter error is ~10% (fourth quote).

OK, having checked the Mainzer et al. ApJ 743, 156 paper, what about the Masiero et al. 2011 paper itself? In that paper, Mainzer et al. ApJ 736, 100 is referenced as Mainzer et al. 2011b. Here are all seven of the sentences which reference that paper.

To verify the excerpts above, one can simply search in the PDF of the Masiero et al. 2011 paper for mentions of “2011b.”

Not one of these references to the ApJ 736, 100 paper makes any mention that diameters from ROS studies were copied rather than being due to model fitting, nor does any other part of section 3, or the rest of the paper.

The key takeaway here is that Masiero admits that the copying of ROS diameters was intentionally done, but he gives a very dubious answer as to why it was done. He is also entirely untruthful in claiming that this was properly referenced.

Masiero et al. 2011 copied diameters for at least 99 asteroids (Mainzer et al. 2011 ApJ 743, 156 did another 6 asteroids). Results with copied diameters were intermingled in Table 1 and in the much larger data table with cases where the diameter was set by thermal modeling. No way was given to tell which is which.

Masiero asks us to believe that because the Mainzer et al. ApJ 736, 100 paper uses the ROS diameters of 48 asteroids for a calibration and accuracy analysis, he has license to copy the diameters for those, plus another 51 asteroids, and present them as modeling results, interspersed with results whose diameters were fit by modeling. Further, we are asked to accept that it is okay that these results are not marked or denoted in any way; instead, every indication is given that they are thermal-modeling results. The 48 asteroids are identified by name in the Mainzer et al. ApJ 736, 100 paper, but the other 51 are not identified anywhere.

This is a clear case of plagiarism, a quite serious form of scientific misconduct. However, the larger purpose is not simply to copy the work of others, but also to make NEOWISE appear to be very accurate.

How to check: Fraudulent backdating of results in the PDS archive

In 2016 Masiero and colleagues published a compilation of data from previous NEOWISE studies in the NASA Planetary Data System (PDS) as NEOWISE V1.0: https://sbn.psi.edu/pds/resource/neowisediam.html. The main result file is neowise_mainbelt.tab, which is available here: https://sbn.psi.edu/pds/asteroid/EAR_A_COMPIL_5_NEOWISEDIAM_V1_0/data/neowise_mainbelt.tab.

The file is huge. A browser will open it successfully but will not format it very well. The simplest thing to do is to open it in a browser and copy the first chunk of the file into a text editor or word processor. Here is what you will see at the top of the file.

2,"- ","00002 ", 4.06,+0.11,2455403.4552245, 11, 11, 11, 0,"DV-I",642.959, 13.759,0.101,0.008,0.085,0.013,1.200,0.023,"-","Mas14"
2,"- ","00002 ", 4.06,+0.11,2455236.5689880, 11, 11, 8, 0,"DV-I",610.818, 49.581,0.113,0.021,0.101,0.022,1.200,0.139,"-","Mas14"
5,"- ","00005 ", 6.85,+0.15,2455392.0389605, 9, 13, 13, 13,"DVBI",108.293, 3.703,0.274,0.033,0.365,0.030,0.867,0.101,"-","Mas14"
6,"- ","00006 ", 5.71,+0.24,2455359.6921615, 14, 14, 14, 13,"DVBI",195.639, 5.441,0.240,0.044,0.346,0.028,0.913,0.091,"-","Mas14"
8,"- ","00008 ", 6.35,+0.28,2455347.9179510, 15, 17, 16, 17,"DVBI",147.491, 1.025,0.226,0.041,0.378,0.038,0.808,0.011,"-","Mas14"
9,"- ","00009 ", 6.28,+0.17,2455382.4805565, 15, 15, 14, 15,"DVBI",183.011, 0.390,0.156,0.029,0.330,0.012,0.860,0.013,"-","Mas14"
9,"- ","00009 ", 6.28,+0.17,2455213.5454400, 10, 10, 10, 10,"DVBI",184.158, 0.899,0.158,0.012,0.337,0.036,0.784,0.004,"-","Mas14"
10,"- ","00010 ", 5.43,+0.15,2455316.1308670, 11, 11, 6, 0,"DV-I",533.302, 42.541,0.042,0.009,0.048,0.009,1.200,0.168,"-","Mas14"
11,"- ","00011 ", 6.61,+0.15,2455270.6805650, 9, 10, 9, 10,"DVBI",142.887, 1.008,0.191,0.021,0.351,0.028,0.784,0.016,"-","Mas14"
12,"- ","00012 ", 7.24,+0.22,2455234.4565780, 17, 19, 14, 21,"DVBI",115.087, 1.199,0.163,0.027,0.321,0.024,0.837,0.022,"-","Mas14"
13,"- ","00013 ", 6.74,+0.15,2455275.0157195, 0, 4, 4, 8,"DVB-",222.792, 7.870,0.070,0.008,0.105,0.011,0.900,0.027,"-","Mas11"

Here are the things to notice. For asteroid 2, the first result is

2,"- ","00002 ", 4.06,+0.11,2455403.4552245, 11, 11, 11, 0,"DV-I",642.959, 13.759,0.101,0.008,0.085,0.013,1.200,0.023,"-","Mas14"

The description of each column is in the file neowise_mainbelt.lbl: https://sbn.psi.edu/pds/asteroid/EAR_A_COMPIL_5_NEOWISEDIAM_V1_0/data/neowise_mainbelt.lbl, which is not very easy to read, but it tells us that the diameter is in column 12, so for the one above it is 642.959 km. The last column definition, column 21, is described as this (emphasis added):

COLUMN_NUMBER = 21
NAME = “REFERENCE”
DESCRIPTION = “Reference to the original publication of fitted parameters.”

“Mas14” is the abbreviation for the Masiero et al. 2014 paper above. But asteroid 2 never appeared in the original publication! That data table is shown above, and it does not have it. So the PDS archive has two results for asteroid 2 that were never in the original paper, but now have been added and retroactively attributed to the paper.

There is a good reason that asteroid 2 wasn’t in the Masiero et al. 2014 paper. That paper requires observations in each WISE band. Columns 7 to 10 in the PDS file give the number of observations in each band. For asteroid 2 there are zero observations in the W4 band. If you check the results in the Masiero et al. 2011 paper above, you see that is true there as well. None of the results in the Masiero et al. 2014 paper as originally published has a zero observation count in W4.
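For readers who would rather script this check than eyeball it, here is a minimal Python sketch. It assumes the records are comma-separated with quoted fields delimited by plain ASCII double quotes, that columns 7 to 10 hold the per-band observation counts, column 12 the diameter, and column 21 the reference, as described above. The function names are my own, and only two records from the top of the file are embedded as sample data; point it at the full file to check everything.

```python
import csv
import io

# Two records copied from the top of neowise_mainbelt.tab.
SAMPLE = '''2,"- ","00002 ", 4.06,+0.11,2455403.4552245, 11, 11, 11, 0,"DV-I",642.959, 13.759,0.101,0.008,0.085,0.013,1.200,0.023,"-","Mas14"
5,"- ","00005 ", 6.85,+0.15,2455392.0389605, 9, 13, 13, 13,"DVBI",108.293, 3.703,0.274,0.033,0.365,0.030,0.867,0.101,"-","Mas14"
'''


def parse_records(text):
    """Parse PDS table text into dicts (column layout as described in the article)."""
    rows = []
    for fields in csv.reader(io.StringIO(text), skipinitialspace=True):
        if len(fields) < 21:
            continue  # skip blank or truncated lines
        rows.append({
            "number": int(fields[0]),                       # column 1
            "bands": [int(f) for f in fields[6:10]],        # columns 7-10: W1..W4 counts
            "diameter_km": float(fields[11]),               # column 12
            "reference": fields[20].strip(),                # column 21
        })
    return rows


def mas14_missing_band(rows):
    """Records attributed to Mas14 that have zero observations in some band."""
    return [r for r in rows if r["reference"] == "Mas14" and 0 in r["bands"]]


rows = parse_records(SAMPLE)
for r in mas14_missing_band(rows):
    print(r["number"], r["bands"], r["diameter_km"])
```

Any record this prints is attributed to Mas14 even though it lacks observations in at least one band.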

Meanwhile, the results for asteroid 2 from Masiero et al. 2011 (abbreviated as Mas11 in PDS) are simply missing. The same thing happens for the copied results for asteroids 5, 6, and 8. There should be a Mas11 entry for each of them, but it is missing. The Mas14 result is all that is there.

Asteroid 13 is a different story. That asteroid is not in Masiero et al. 2014, and it wasn’t added retroactively the way asteroid 2 was. Instead the PDS contains this:

13,"- ","00013 ", 6.74,+0.15,2455275.0157195, 0, 4, 4, 8,"DVB-",222.792, 7.870,0.070,0.008,0.105,0.011,0.900,0.027,"-","Mas11"

This shows a diameter of 222.792 km, while the actual Masiero et al. 2011 paper shows a copied diameter of 227.000 km. Yet the PDS entry cites “Mas11” as its source for this entry. The PDS archive has effectively altered results, misrepresenting them as previously peer-reviewed and published in Masiero et al. 2011, a paper published five years earlier.

The same occurs for all of the asteroids that I have identified as having copied ROS diameters that appeared in the Masiero et al. 2011 paper. They have all either been deleted or replaced by a new result that is falsely and retroactively attributed to the paper.
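The asteroid 13 comparison can be scripted in a few lines. This is only a sketch: the dictionary of published values and the list of PDS rows are filled in by hand from the numbers quoted above, and the function name and tolerance are my own choices. To repeat it for other asteroids you would load both tables from the files linked in this article.

```python
# Published Mas11 diameter (km) for asteroid 13, as quoted in the text.
published_mas11 = {13: 227.000}

# (asteroid number, diameter in km, reference) taken from the PDS record.
pds_rows = [(13, 222.792, "Mas11")]


def altered_entries(pds_rows, published, ref="Mas11", tol=0.0005):
    """Return (number, published, archived) where the archive disagrees with the paper."""
    mismatches = []
    for number, diameter, reference in pds_rows:
        if reference != ref or number not in published:
            continue
        if abs(diameter - published[number]) > tol:
            mismatches.append((number, published[number], diameter))
    return mismatches


for number, paper_d, pds_d in altered_entries(pds_rows, published_mas11):
    print(f"asteroid {number}: paper {paper_d:.3f} km, PDS {pds_d:.3f} km")
```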

This was not disclosed in the NEOWISE PDS archive documentation. The overall catalog description of the NEOWISE archive is in the file dataset.cat: https://sbn.psi.edu/pds/asteroid/EAR_A_COMPIL_5_NEOWISEDIAM_V1_0/catalog/dataset.cat.

The first sentence of the description is this (emphasis added):

This PDS data set represents a compilation of published diameters, optical albedos, near-infrared albedos, and beaming parameters for minor planets detected by NEOWISE during the fully cryogenic, 3-band cryo, post-cryo and NEOWISE-Reactivation Year 1 operations.

The PDS system has a formal way to designate results that only come from prior results rather than being new. The “PUBLISHED_LITERATURE” designation is specifically used for INSTRUMENT_HOST, as is explained in the file publithost.cat: https://sbn.psi.edu/pds/asteroid/EAR_A_COMPIL_5_NEOWISEDIAM_V1_0/catalog/publithost.cat. Here is a quote from it (emphasis added):

The PUBLISHED_LITERATURE designation for INSTRUMENT_HOST is used when the data presented in a given data set were collected entirely by referencing results published in the literature. The data set description or product labels will provide the explicit references, which in turn should provide details of the original observing campaigns, data reduction, or criteria for inclusion for each datum reported in the PDS data set.

The importance of the two quotes above is that they, along with the “REFERENCE” DESCRIPTION in column 21, clearly tell the user of the PDS archive that every datum is identical to the original publications. Only that isn’t true.

NEOWISE Response to: Fraudulent backdating of results in the PDS archive

During review of my June 2018 paper at Icarus, one of the peer reviewers asked editor Will Grundy to pose questions to the NEOWISE group. In addition, Grundy sent them the then-current version of the manuscript of the paper, so they had context for answering the questions.

One of the questions was about the copied diameters and why they were not in the PDS archive. The NEOWISE group responded as follows (text coloring and formatting retained from the original):

(The remainder of the message is not relevant to the issue considered here.)

The Masiero et al. 2014 paper re-analyzed about 3,000 asteroids that had originally appeared in the Mas11 paper (see section 2 of the Empirical paper), using a different method.

The argument made above by the NEOWISE response is that it is simply a coincidence that the results that have copied diameters were eliminated. They say that the 3,000 or so asteroids in Mas14 tend to be the larger and closer asteroids which also have a ROS diameter. Implicit in their argument is that it is valid to drop the Mas11 results whenever there is a Mas14 result because the newer paper supersedes the older one.

Unfortunately, this argument fails on multiple grounds.

- It is not appropriate to DELETE the Mas11 results and only include the Mas14 results. One of the reasons to have fitted results for ROS asteroids is to do the comparison between the ROS diameter and the NEOWISE fitted diameter. Mas11 and Mas14 have different models (as clearly stated in the passage above). So if you compare ROS to Mas14 you will get an error estimate, but that won’t tell you about the error for the ~130,000 asteroids in Mas11, which were calculated with its model. In Mas11 the NEOWISE group made it impossible to check their accuracy by copying the diameters for every ROS asteroid available at the time. Now in the PDS they make it impossible to check by deleting those results and leaving only the different model results from Mas14. Yet that is what they did in most cases, though interestingly not in all cases.

- Mas14 was NOT chosen to supersede Mas11 in all cases! The passage above implies that across the board, whenever there was a Mas14 result for an asteroid, that was chosen over the Mas11 value. Except that is not true! There are 102 asteroids in the PDS that appear in both the Mas11 and Mas14 results!

Here is a list of the asteroid numbers for which results appear in both Mas11 and Mas14:

717, 876, 882, 883, 918, 1003, 1299, 1429, 1432, 1536, 1568, 1573, 1738, 1802, 1936, 1942, 1970, 2033, 2079, 2111, 2123, 2124, 2125, 2144, 2242, 2275, 2316, 2344, 2401, 2432, 2463, 2558, 2567, 2788, 2908, 2947, 2984, 3157, 3162, 3411, 3519, 3537, 3544, 3609, 3698, 3755, 4061, 4066, 4093, 4150, 4164, 4252, 4256, 4615, 4645, 4742, 4909, 4913, 5035, 5333, 5525, 5527, 5574, 6045, 6079, 6091, 6101, 6164, 6838, 6924, 6961, 8941, 9192, 10175, 10259, 10325, 10419, 11029, 12376, 13788, 14950, 15549, 15844, 15988, 16184, 17065, 17569, 18429, 18640, 19538, 20423, 20489, 20963, 21594, 22253, 29931, 30545, 31762, 31784, 38504, 63157, 94346

Here are the records in the PDS data file neowise_mainbelt.tab for the first example, asteroid 717, as they appear if viewed in EmEditor or another application:

717,"- ","00717 ",11.10,+0.15,2455389.9543170, 10, 10, 11, 10,"DVBI", 27.294, 0.444,0.086,0.009,0.192,0.027,0.676,0.012,"-","Mas14"
717,"- ","00717 ",11.10,+0.15,2455223.2073495, 8, 0, 10, 10,"DVBI", 27.656, 0.202,0.084,0.015,0.207,0.015,0.670,0.003,"-","Mas11"

It is thus wrong to imply that across the board Mas14 results were chosen over Mas11 results. This shows that the claim in the passage quoted above is FALSE.
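Anyone can regenerate the list of doubly listed asteroids with a short script. The following Python sketch groups records by asteroid number and reports those that carry both a Mas11 and a Mas14 reference. It assumes the same comma-separated layout as above, with the asteroid number in column 1 and the reference in column 21; the function names are hypothetical, and the three embedded records are only a sample.

```python
import csv
import io
from collections import defaultdict

# Sample records from neowise_mainbelt.tab: two for asteroid 717, one for asteroid 2.
SAMPLE = '''717,"- ","00717 ",11.10,+0.15,2455389.9543170, 10, 10, 11, 10,"DVBI", 27.294, 0.444,0.086,0.009,0.192,0.027,0.676,0.012,"-","Mas14"
717,"- ","00717 ",11.10,+0.15,2455223.2073495, 8, 0, 10, 10,"DVBI", 27.656, 0.202,0.084,0.015,0.207,0.015,0.670,0.003,"-","Mas11"
2,"- ","00002 ", 4.06,+0.11,2455403.4552245, 11, 11, 11, 0,"DV-I",642.959, 13.759,0.101,0.008,0.085,0.013,1.200,0.023,"-","Mas14"
'''


def references_by_asteroid(text):
    """Map asteroid number -> set of reference codes found in the table text."""
    refs = defaultdict(set)
    for fields in csv.reader(io.StringIO(text), skipinitialspace=True):
        if len(fields) >= 21:
            refs[int(fields[0])].add(fields[20].strip())
    return refs


def in_both(refs, a="Mas11", b="Mas14"):
    """Sorted asteroid numbers that have entries attributed to both papers."""
    return sorted(n for n, codes in refs.items() if {a, b} <= codes)


print(in_both(references_by_asteroid(SAMPLE)))
```

To run it on the real archive, read the downloaded neowise_mainbelt.tab into a string and pass it to references_by_asteroid in place of SAMPLE.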

In the result above, the Mas14 result is a diameter of 27.294 km, with a sigma of 0.444 km. The Mas11 result actually has a smaller sigma — of 0.202 km. Could its smaller (claimed) error be why it was retained in the PDS?

Here are the records in the PDS data file neowise_mainbelt.tab for the second asteroid on the list, asteroid 876:

876,"- ","00876 ",10.89,+0.15,2455374.2133600, 13, 14, 14, 14,"DVBI", 22.420, 0.142,0.155,0.034,0.154,0.012,0.847,0.019,"-","Mas14"
876,"- ","00876 ",10.89,+0.15,2455209.2443270, 11, 0, 11, 11,"DVBI", 22.554, 0.390,0.153,0.014,0.179,0.007,0.951,0.033,"-","Mas11"

In this case we see that the Mas11 result for 876 has been kept in the PDS even though it has a sigma of 0.390, which is more than 2.7 times larger than the sigma of the Mas14 result (0.142). Why keep the Mas11 result that is less accurate (by their measure), if the goal is to choose the latest and greatest versions from Mas14? Apparently the magnitude of the estimated error was not the criterion used to choose what to keep and what to discard.
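The arithmetic behind the two examples is easy to reproduce. This small Python sketch uses only the four sigma values quoted in the records above; the function name is my own.

```python
# (asteroid, sigma of Mas14 diameter, sigma of Mas11 diameter), all in km,
# taken from the PDS records quoted in the text.
pairs = [(717, 0.444, 0.202), (876, 0.142, 0.390)]


def sigma_ratio(mas14_sigma, mas11_sigma):
    """Ratio of the retained Mas11 sigma to the Mas14 sigma."""
    return mas11_sigma / mas14_sigma


for number, s14, s11 in pairs:
    ratio = sigma_ratio(s14, s11)
    label = "smaller" if ratio < 1 else "larger"
    print(f"{number}: Mas11 sigma is {ratio:.2f}x the Mas14 sigma ({label})")
```

The ratio comes out at about 0.45 for asteroid 717 and about 2.75 for asteroid 876, so the retained Mas11 result has the smaller claimed error in one case and the larger in the other.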

- It is false that all of the asteroids with copied ROS diameters are in Mas14. The passage above claims that “all of the objects that have radar detections also satisfied they criteria for 4-band detection, and thus were revised with the improved thermal modeling method.”

In actual fact, there are 12 asteroids that have copied ROS diameters in Mas11 but that do not have a counterpart in Mas14. They are these asteroids:

13, 36, 46, 53, 54, 84, 105, 134, 313, 336, 345, 2867

As shown above, the PDS result for asteroid 13 has been modified from the original publication Mas11 in ApJ. Yet it was attributed to Mas11 in the PDS.

It is also clear why asteroid 13 was not in Mas14 — as the NEOWISE group clearly states in their reply to the editor, Mas14 is limited to asteroids which “had high SNR in all four WISE bands.” Yet as shown above, the numbers 0, 4, 4, and 8 are the counts of observations in each band that were used for the result. Since there is a zero in the W1 band for asteroid 13, it couldn’t be in Mas14 by the standards discussed in the passage above — it is missing a band!

So for the 12 asteroids, their original published result “disappeared,” in the terminology used in the response to the editor.

- Asteroid 2 Pallas had its Mas11 result deleted, and a new result was retroactively attributed to Mas14. This has already been demonstrated above.

- Asteroid 522 is simply deleted. Here is an excerpt from the top of the Mas11 data file, which is available at http://iopscience.iop.org/0004-637X/741/2/68/suppdata/apj398969t1_mrt.txt

00521 8.310 0.06 110.628 2.956 0.0684 0.0129 0.930 0.020 0.0629 0.0063 11 11 12 11
00522 9.120 0.15 83.700 4.850 0.0572 0.0133 0.841 0.036 0.0887 0.0135 9 9 9 10
00524 9.830 0.15 64.107 1.878 0.0323 0.0057 0.941 0.020 0.0484 0.0085 13 13 12 13

You can see that asteroid 522 has a copied diameter of 83.7 km.
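If you download the Mas11 data file, a lookup like this can be scripted. The Python sketch below is a rough illustration only: it assumes each data line is whitespace-separated, with the asteroid number first and the diameter and its sigma in the fourth and fifth fields, which I infer from the excerpt above rather than from the file's formal column description. The real file has header lines and may have blank fields that this simple split would not handle.

```python
# The three Mas11 lines excerpted in the text.
MAS11_EXCERPT = """\
00521 8.310 0.06 110.628 2.956 0.0684 0.0129 0.930 0.020 0.0629 0.0063 11 11 12 11
00522 9.120 0.15 83.700 4.850 0.0572 0.0133 0.841 0.036 0.0887 0.0135 9 9 9 10
00524 9.830 0.15 64.107 1.878 0.0323 0.0057 0.941 0.020 0.0484 0.0085 13 13 12 13
"""


def diameters_by_number(text):
    """Map asteroid number -> (diameter km, sigma km); field positions are assumed."""
    result = {}
    for line in text.splitlines():
        fields = line.split()
        if len(fields) < 5 or not fields[0].isdigit():
            continue  # skip headers and anything that is not a numbered data line
        result[int(fields[0])] = (float(fields[3]), float(fields[4]))
    return result


table = diameters_by_number(MAS11_EXCERPT)
print(table.get(522))
```

The same function can be pointed at any other table once you adjust the field positions; an asteroid that is absent simply returns None from table.get.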

Asteroid 522 does NOT appear in Mas14 despite having 9 to 10 observations in all four WISE bands. Here is an excerpt of the Mas14 data file, available from http://iopscience.iop.org/0004-637X/791/2/121/suppdata/apj498786t1_mrt.txt:

00521 107.23 0.49 0.87 0.01 0.07 0.02 0.07 0.01 8.3 0.06
00524 65.53 0.96 0.91 0.04 0.05 0.01 0.05 0.01 9.8 0.15

It does not appear in the PDS archive file neowise_mainbelt.tab, excerpted here: